This post is Part 1 of a 3-part series derived from TrustInSoft’s latest white paper, “How Exhaustive Static Analysis Can Prove the Security of TEEs.” To obtain a free copy, click here.

Modern consumer technology is increasingly complex, connected, and personalized. Our devices are carrying more and more sensitive data that must be protected.

We need to be able to trust our devices to protect both themselves and our data from malevolent hackers. In essence, we need our devices to guarantee a high level of trust.

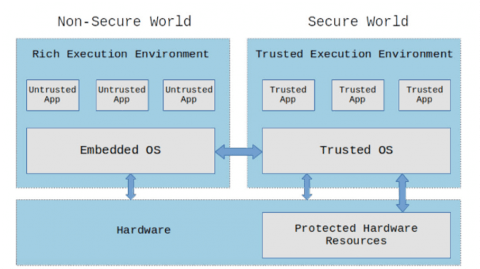

A trusted execution environment (TEE) is a secure area within a processor designed to provide the level of trust we require. It is an environment in which the code executed and the data accessed are both isolated and protected. It ensures both confidentiality (no unauthorized parties can access the data) and integrity (nothing can change the code and its behavior).1 Figure 1 illustrates the partitioning of a trusted execution environment (“Secure World”) within a processor.

Figure 1: Partitioning a system using a trusted execution environment (Source: #embeddedbits) 2

TEEs are essential components of many of the devices we own and use, including smartphones, tablets, game consoles, set-top boxes, and smart TVs. They are well-suited to providing security for applications like storage and management of device encryption keys, biometric authentication, mobile e-commerce applications, and digital copyright protection.

For a TEE to fulfill its function, however, the behavior of its code must be perfectly deterministic, reliable, and impervious to attack. Therefore, it must be free of software errors that could be either sources of anomalous behavior or vulnerabilities for hackers to exploit.

But TEEs are made up mostly of code. Lots and lots of code. While not as extensive as rich operating systems, they’re still complex beasts.

When we write code, we introduce bugs. That’s simply inevitable, due to the complexity of today’s applications and the propensity for human error in complex processes like software development. The larger and more complex the piece of code, the greater the number of bugs introduced, and the more difficult it is to detect and eliminate those bugs. According to data pipeline management and analytics firm Coralogix:3

For many applications—most consumer apps, for example—removing bugs is not a great concern. As long as a bug isn’t a showstopper, it can be cleaned up in a future release.

For applications where safety, reliability, or security is critical, however, failure to find and remove bugs could lead to disaster. A TEE is one such application. The problem, then, is finding an efficient way to eliminate those bugs.

Traditional software testing starts from the software requirements, which are derived by decomposing and refining the top-level system requirements. You develop a test plan and test cases that cover those requirements as thoroughly as possible, then test the software against them. In the end, you hope that the tests you’ve performed have adequately verified that the software meets its requirements and that the code exhibits no anomalous behaviors under real-world conditions.

The process is an iterative one based on finding bugs and fixing them. It might also be called a “best-effort” process; you do the best you can to find all the bugs you can in the time you have budgeted for software testing.

This traditional process, however, offers no guarantees that every last bug has been eliminated.

The common tools on the market supporting traditional software testing are typically designed to uncover obvious errors. Most search for patterns that are recognized as bad coding techniques.

Unfortunately, such tools are not designed to find less common, more subtle, and more insidious errors. Thus, they provide no guarantee that, whatever happens in the environment, your software will be impervious to attack and will not crash.

Some software bugs are very subtle. They may be triggered by use cases not envisioned by test planners. Such bugs often remain latent until triggered by an unexpected event… or until exploited by an industrious and ill-intentioned hacker.
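To make this concrete, here is a minimal sketch in C of the kind of latent bug described above. It is not taken from any real TEE. The function names, the 64-byte buffer size, and the test cases are all hypothetical, and the sketch assumes a typical platform where `int` is no wider than 32 bits, so that `uint32_t` addition wraps around. The naive range check passes every case a requirements-based test plan would plausibly list, yet it accepts an out-of-range request when the sum of its arguments wraps.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define BUF_SIZE 64u /* hypothetical buffer size, for illustration only */

/* Hypothetical check: does [offset, offset + len) fit in a BUF_SIZE-byte buffer?
 * Subtle bug: offset + len is computed in unsigned 32-bit arithmetic
 * (assuming int is at most 32 bits wide), so a huge offset can wrap the
 * sum around to a small value that passes the comparison. */
bool range_ok_naive(uint32_t offset, uint32_t len)
{
    return offset + len <= BUF_SIZE;
}

/* The same check rewritten so that no intermediate result can wrap:
 * BUF_SIZE - offset is only evaluated once offset <= BUF_SIZE is known. */
bool range_ok_checked(uint32_t offset, uint32_t len)
{
    return offset <= BUF_SIZE && len <= BUF_SIZE - offset;
}

/* Cases a test planner would plausibly derive from the requirement
 * "reject any range that falls outside the buffer". All of them pass,
 * even though range_ok_naive is wrong. */
void run_planned_tests(void)
{
    assert(range_ok_naive(0u, 64u));   /* the whole buffer */
    assert(range_ok_naive(16u, 48u));  /* ends exactly at the boundary */
    assert(!range_ok_naive(16u, 49u)); /* one byte too long */
    assert(!range_ok_naive(65u, 0u));  /* offset past the end */
}
```

Under the stated assumption, `range_ok_naive(UINT32_MAX, 2u)` returns `true` because the sum wraps around to 1, while `range_ok_checked(UINT32_MAX, 2u)` returns `false`. Nothing in the planned tests exercises that input, so the flaw stays latent until someone supplies it, by accident or on purpose.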

Traditional software testing has failed many companies when it comes to security from cyberattacks. Here are just two recent examples:

Besides lacking a guarantee, traditional software testing has also proven to be a very expensive method for validating critical software, meaning software that needs to be flawless, like that in a TEE.

In our next post, we’ll examine why that is true. We’ll also look at why industries that place a high premium on reliability or safety began moving away from traditional software testing over twenty years ago, and why developers who need to assure device security must now travel a similar path.

If you don’t want to wait for the rest of the series, download our new white paper, “How Exhaustive Static Analysis Can Prove the Security of TEEs.” Besides the content of this blog series, the white paper also looks briefly at a pair of companies that have adopted this technology to validate trusted execution environments. Plus, we provide some tips on what to look for when choosing the solution that’s right for your organization. You can download your free copy here.


1 Prado, S.; Introduction to Trusted Execution Environment and ARM’s TrustZone; #embeddedbits, March 2020.

2 Ibid.

3 Assaraf, A.; This is what your developers are doing 75% of the time, and this is the cost you pay; Coralogix, February 2015.

4 465,000 Abbott pacemakers vulnerable to hacking, need a firmware fix; CSO, September 2017.

5 Snow, J.; Top 5 most notorious cyberattacks; Kaspersky, December 2018.